Designing a chatbot students can trust, especially during stressful moments.

Sheridan’s Chatbot Supports 3,000+ Student Inquiries Each Term.

Summary

I led UX research and conversation design for Sheridan College’s transition from Comm100 to ServiceNow, improving chatbot clarity, intent matching, and student support.

Team

1 UX Researcher (Francine Arabela Paguio), 1 Supervisor (Christopher Kovacs)

My Role

UX Researcher & Designer, responsible for half of each phase

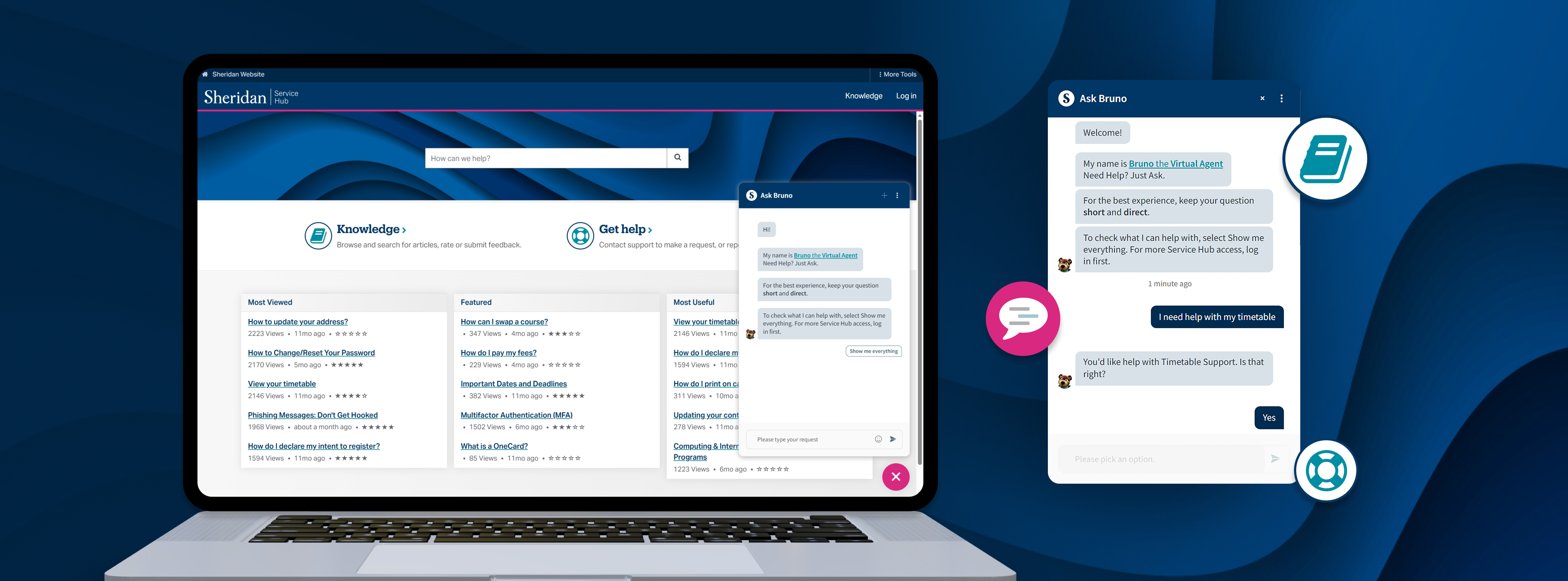

Mockup of the Sheridan College Student Services hub including chatbot interface.

Problem Space

What Was The Problem?

Questions Were Left Unanswered

Students relied on the chatbot for academic, financial, and emotional support, but unclear expectations, overlapping topics, and weak fallback responses led to frustration and unanswered questions.

A student fails to receive an answer from the previous Comm100 chatbot interface.

Impact

Real results for Sheridan students.

Elevated Experience, Measurable Change.

covering previously unmet conversational needs

with clearer, research-backed answers

and enhanced with concise, empathetic language, hierarchy, and personalization 3× through iteration

Research Overview

Discovering What We Need To Include In Our Next Iteration

How Did We Gather Data?

Explored ServiceNow and conversation design principles through courses, articles, competitive analysis, testing the platform (NLU & LLM), and academic journals.

Conversation Design Principles

Google Skills platform, used to complete the Conversation Design Fundamentals course.

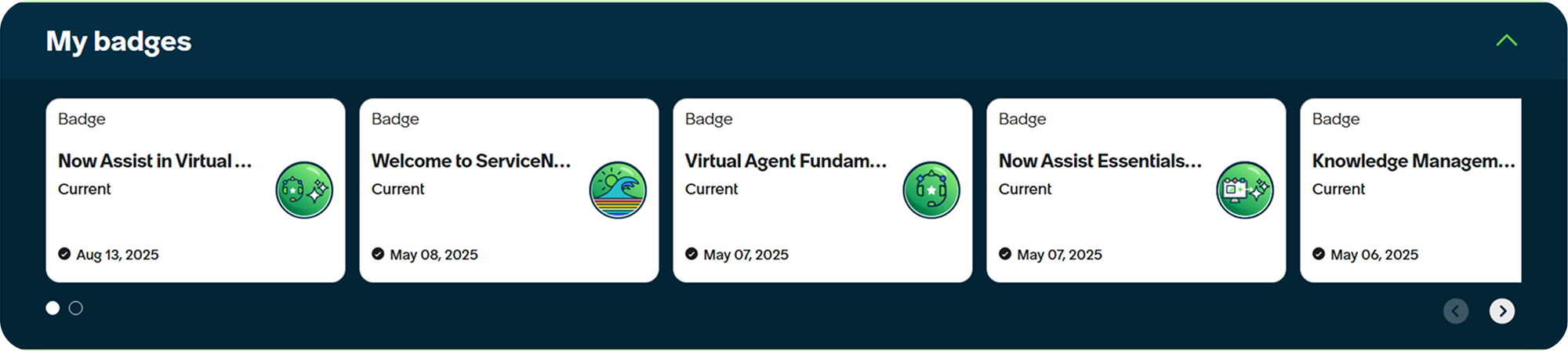

Learning About ServiceNow

Badges earned during the completion of ServiceNow courses involving Now Assist Virtual Agent Chatbot.

Competitive Analysis

Chatbots analyzed: SAVY, T-Mobile, SAM, and Rodney

SAVY

Strengths

Prompts Provided

SAVY by York University provides a friendly greeting experience with default prompts to anticipate student needs.

Weaknesses

Long Response

Its AI-generated responses often bombard students with a lengthy, overwhelming amount of information.

Historical Data Analysis

What Previously Left Student Dissatisfied?

Investigating Old Chat Logs Provided Clues.

Reading through 102 past chat logs, focusing on conversations flagged with negative sentiment, was the most effective way to understand students: it gave us concrete evidence of how they commonly interact with the chatbot.

Real student inquiries attempting to connect to a live support agent.

Synthesis

From All Our Research, What Is Prominent?

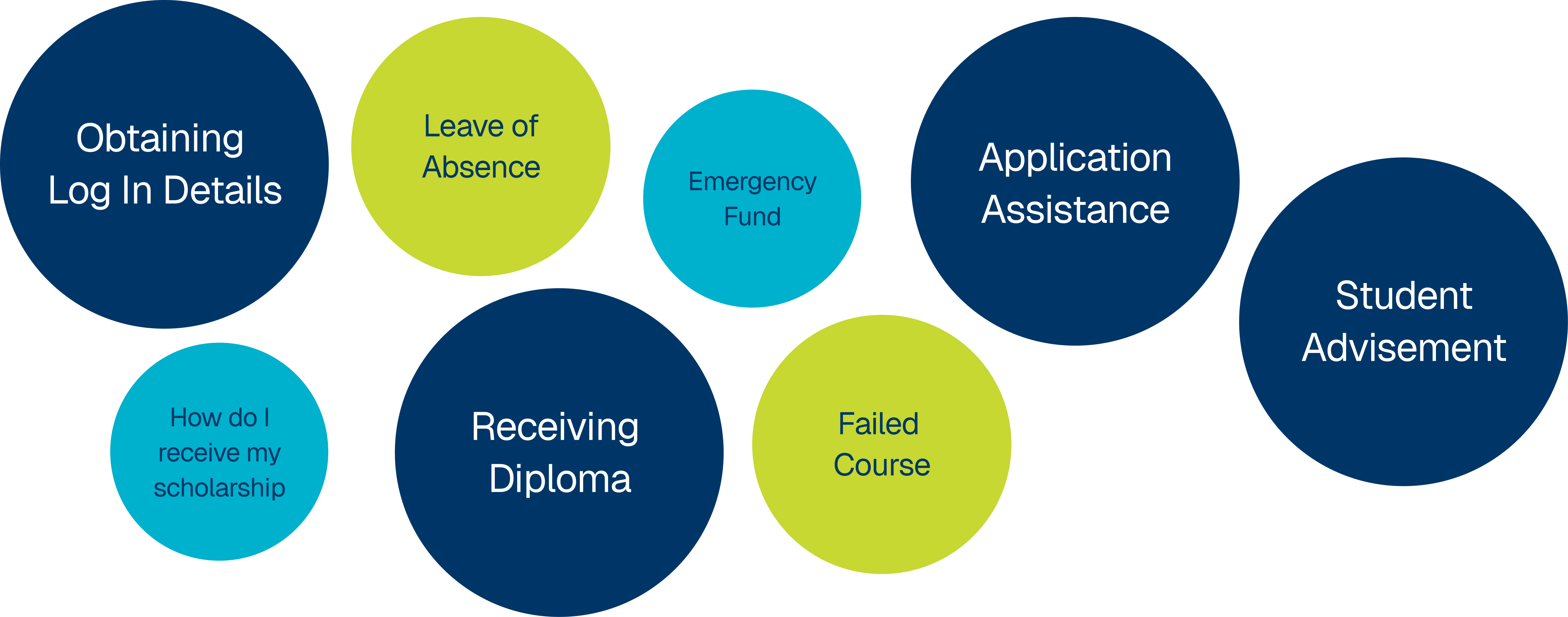

Most Common Topics Searched Among Student Chatbot Requests

Bar graph of the most common topics searched across 102 student chats reviewed from January to June 2025.

Keeping Track Of What Topics To Change And Add

As we went through chats, we noted down topics that needed to be changed or added to improve the chatbot's effectiveness.

Data visualization showing which new topics were added and how prevalent each was in the chat logs, indicated by circle size.

Marking Down Insights For The Conversation Redesign

We compiled research from scholarly articles, our courses, and other online resources to extract insights for redesigning the chatbot's conversations.

Insights

Notable Usability Findings

Information gaps within topic

Gaps in a topic's content mean students can miss their answer even when they are matched to the correct general topic.

Overlapping topics

Overlapping topics can confuse students and dilute the chatbot's effectiveness.

Existing topics don't come up

Even if the right topic exists to answer their questions, students may not be matched to it effectively.

No backup message

There is no fallback message or response for time-sensitive topics, leaving the student without guidance.

Unclear student expectations

There is a general misunderstanding among students about the chatbot’s functionality, which can lead to more frustration.

Shuts down from small talk

The chatbot tends to shut down or become unresponsive when engaged in small talk, limiting its conversational capabilities.

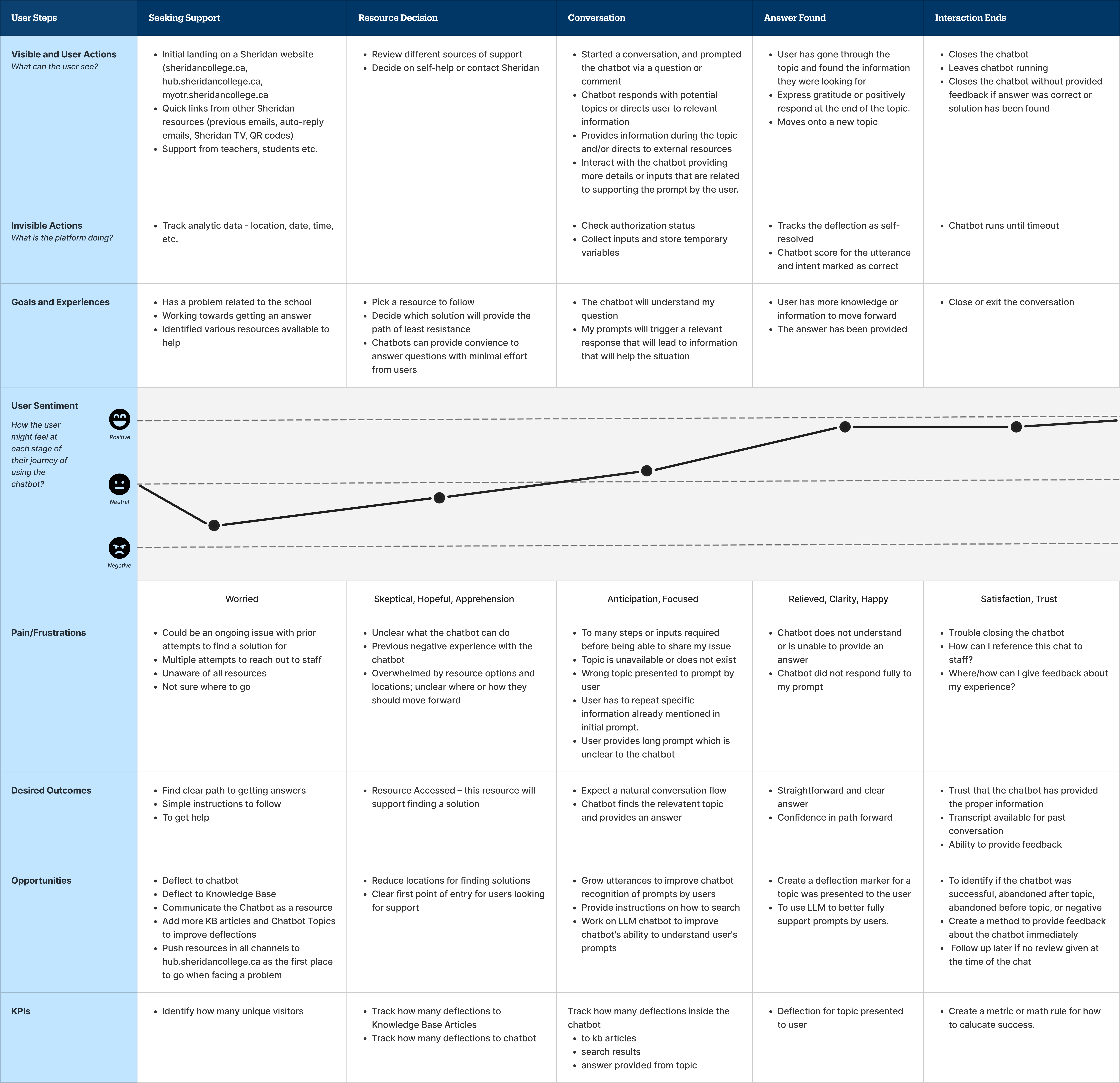

User journey mapping

What Does A Positive Student-Chatbot Interaction Look Like?

Uncovering Student Pain Points And Opportunities By Following Their Footsteps

We traced the student journey, from seeking support to ending the interaction, to identify key pain points and opportunities for improvement within a single chatbot flow. We then implemented a system in ServiceNow to calculate the chatbot's success at the end of this journey.

Screenshot of our user journey map. The student starts out feeling worried and ends up feeling satisfied.

Conversation Redesign

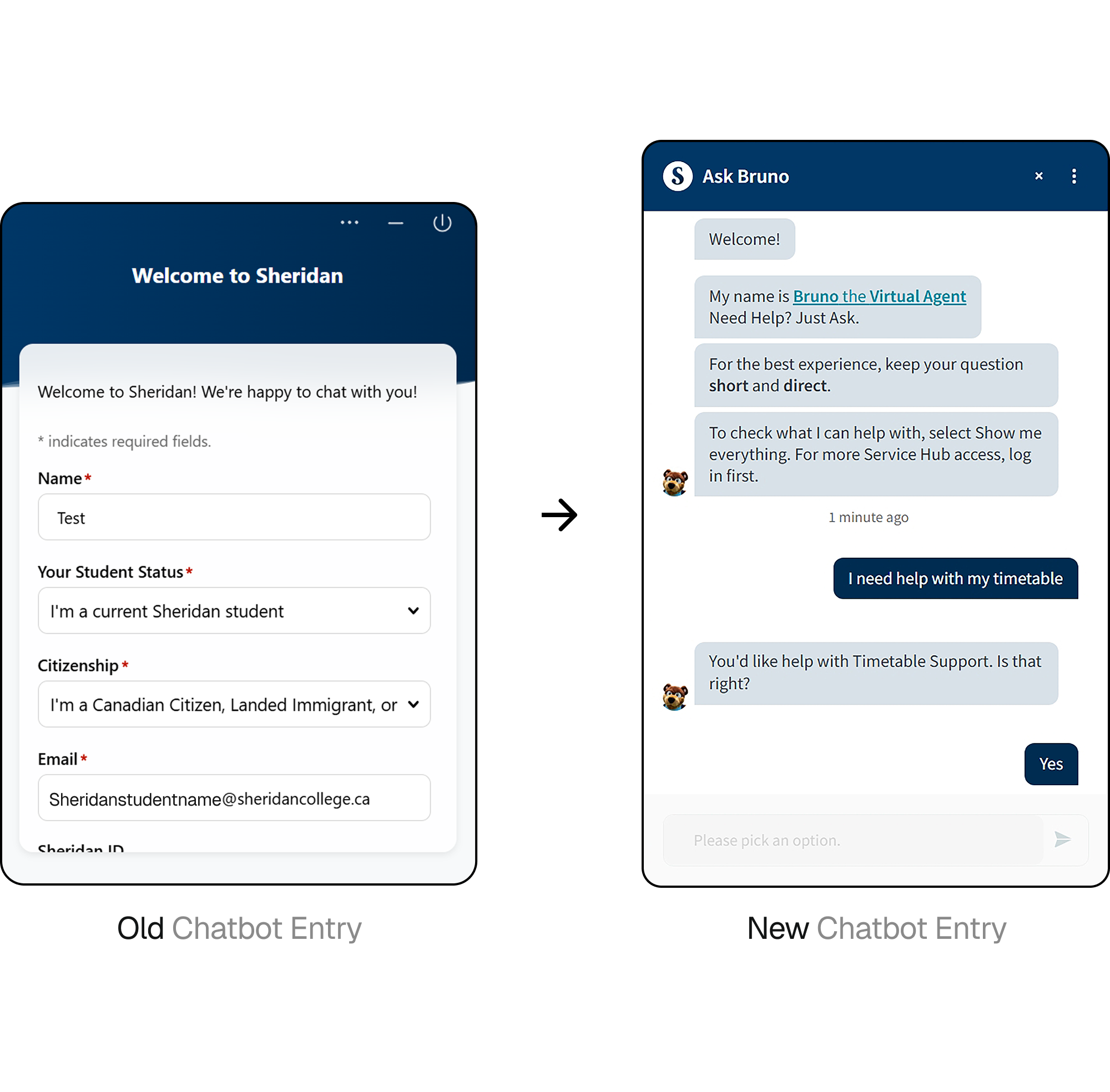

Our New And Improved Greeting

Setting Expectations From The First Message

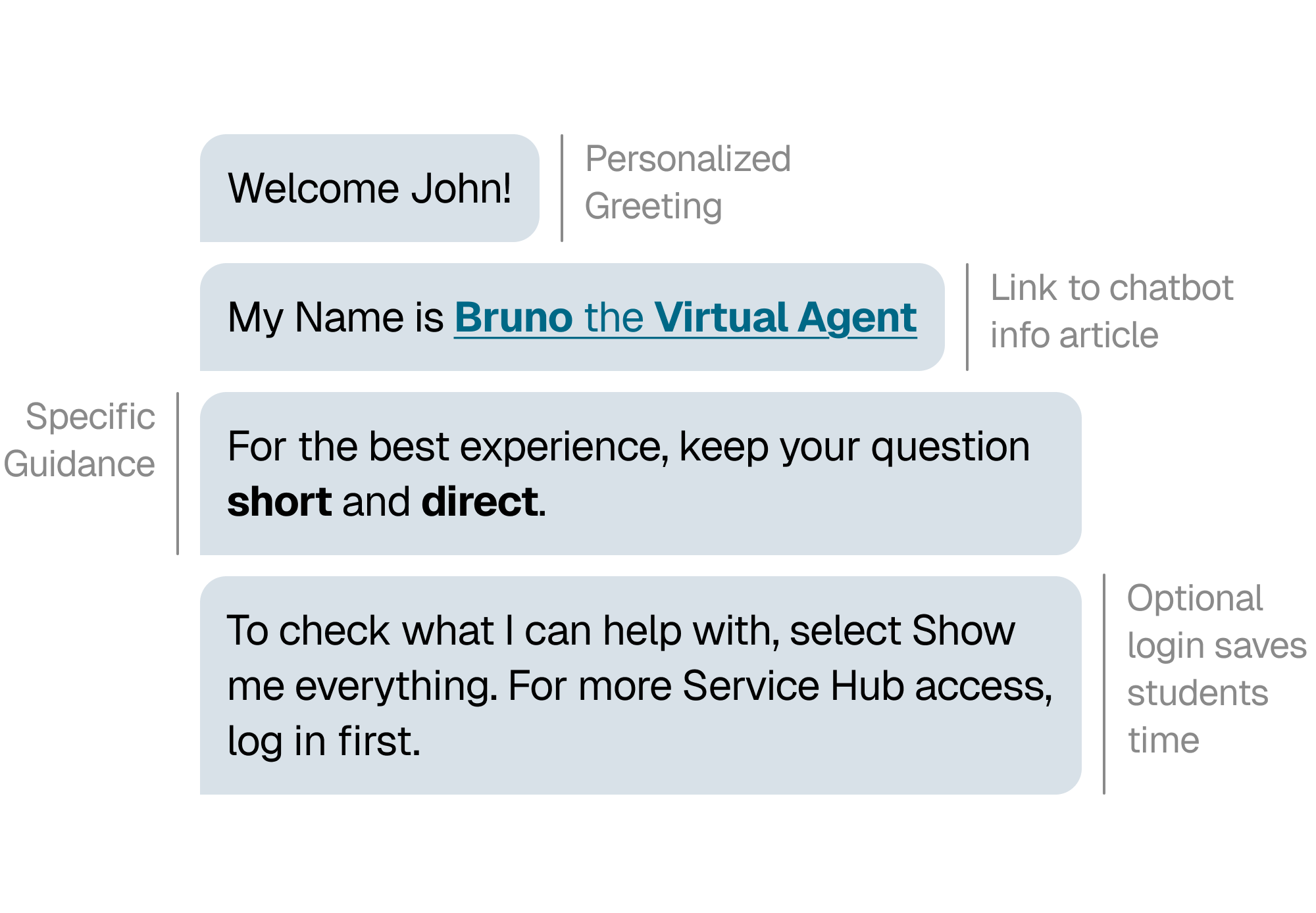

The chatbot greeting often created friction and confusion before users could even ask a question, so we reworked it to remove barriers and set clear expectations. Manual login was replaced with Single Sign-On, and a knowledge base guide was introduced to explain how the chatbot works and how to get the best results. This redesign helped students enter the experience faster, understand system capabilities upfront, and reach relevant answers with less frustration.

Screenshot of old and new greeting conversation.

Before: Greeting

The greeting conversation for the old chatbot.

After: Greeting

The greeting conversation for the new chatbot involving improvements.

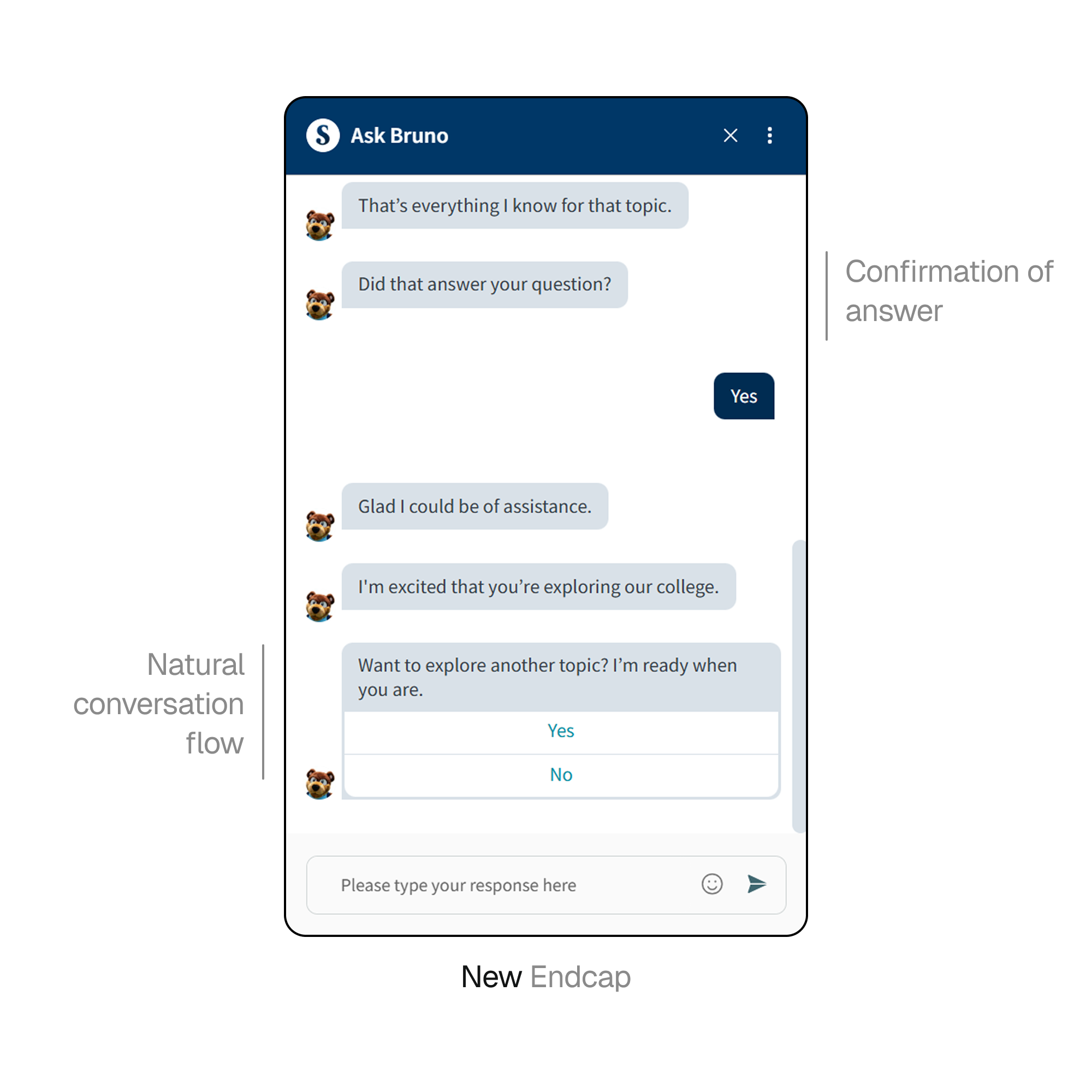

Enhancing The Endcap

Closing The Loop With Clarity And Empathy

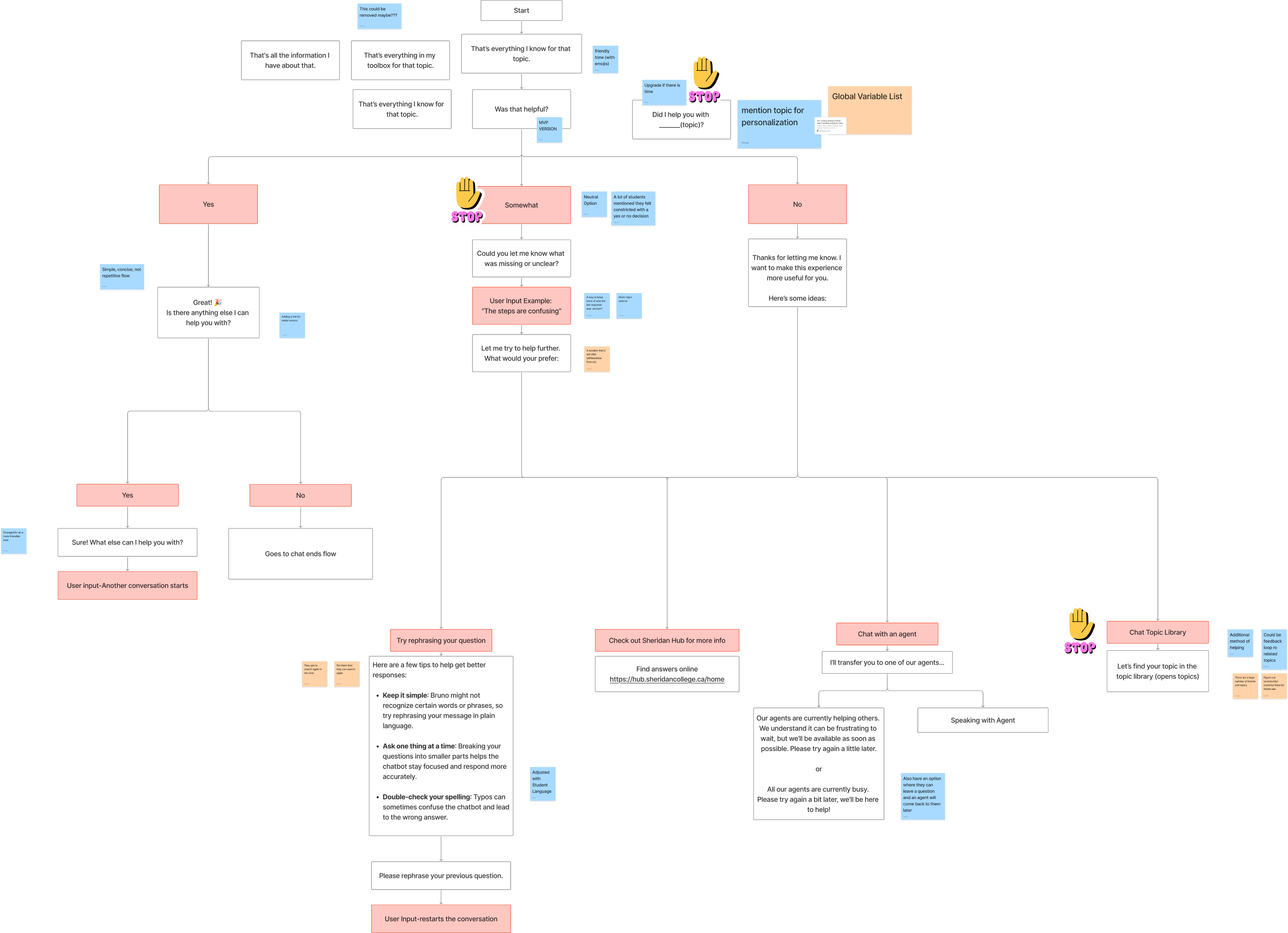

The endcap is the moment where trust is either reinforced or lost, so I redesigned it to clearly confirm understanding and offer next steps. Using research-backed UX writing principles, I replaced long, instructional copy with concise, empathetic language and meaningful fallback options. The final iteration reduced cognitive load (360 → 219 words in the reword prompt) while improving emotional support, personalization, and conversation closure.
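For scale, the word-count change in the reword prompt works out to a reduction of roughly 39%:

$$
\frac{360 - 219}{360} = \frac{141}{360} \approx 0.392 \approx 39\%
$$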

Screenshot of redesigned endcap.

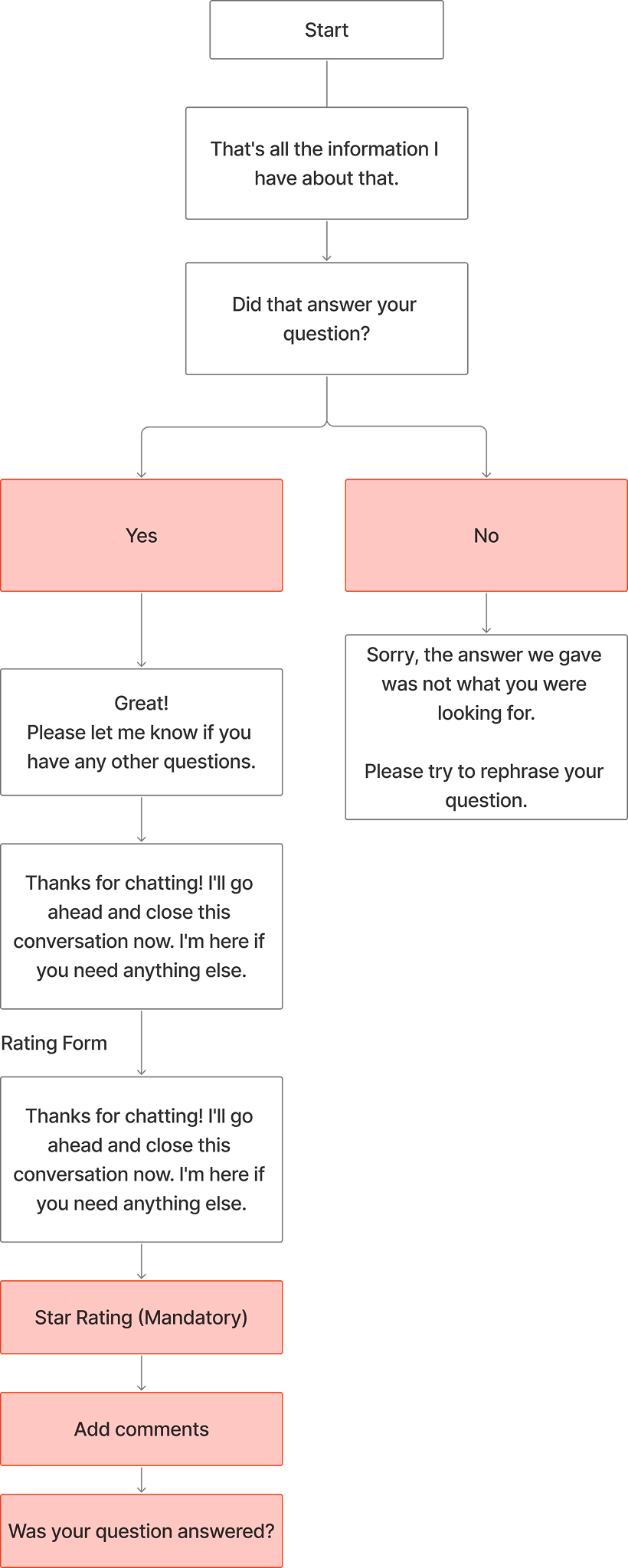

Original Endcap Flow

Original endcap conversation flow.

Drafting Improvements

Screenshot of the flowchart we used to ideate endcap solutions. The improvements we landed on were friendlier language, shortened content, an additional fallback article, and personalization based on student authorization.

Tradeoff

LLM Vs. NLU Decision

Choosing Reliability Over Novelty

What’s the Difference?

LLM (Large Language Model):

Generates dynamic responses using AI, allowing it to interpret complex, open-ended prompts.

NLU (Natural Language Understanding):

Matches user input to predefined intents and responses using structured training data.
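To make the NLU approach more concrete, here is a minimal Python sketch of intent matching with a confidence threshold and a fallback route. It is not ServiceNow's NLU engine, which relies on its own trained models. The intent names, example utterances, token-overlap (Jaccard) scoring, and 0.3 threshold are all hypothetical stand-ins chosen for illustration.

```python
import re

# Hypothetical intents and training utterances, for illustration only.
INTENTS: dict[str, list[str]] = {
    "tuition_payment": ["how do i pay my tuition", "tuition payment deadline"],
    "reset_password": ["i forgot my password", "reset my student login"],
    "talk_to_agent": ["talk to a live agent", "connect me to a person"],
}

FALLBACK = "fallback"  # hypothetical label for "no confident match"


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of tokens the two sets have in common (0.0 to 1.0)."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def match_intent(
    user_input: str,
    intents: dict[str, list[str]] = INTENTS,
    threshold: float = 0.3,
) -> tuple[str, float]:
    """Return (intent, score) for the closest utterance, or FALLBACK if too weak."""
    words = tokenize(user_input)
    best_intent, best_score = FALLBACK, 0.0
    for intent, utterances in intents.items():
        for utterance in utterances:
            score = jaccard(words, tokenize(utterance))
            if score > best_score:
                best_intent, best_score = intent, score
    # Below the threshold, route to a fallback message instead of guessing.
    if best_score < threshold:
        return FALLBACK, best_score
    return best_intent, best_score
```

Because every input is scored only against predefined utterances, unfamiliar phrasing can fall below the threshold and overlapping intents can end up with near-identical scores. This is why intent separation and a clear fallback message mattered in the redesign.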

LLM (Now Assist): Pros & Cons

Pros

- Can respond even when no exact topic exists

- Handles long, complex, or highly personalized prompts

- Interprets conversational language more flexibly

Cons

- Less control over tone, length, and structure

- Higher risk of unexpected or off-topic responses

- Topic overlap and inconsistency required more iteration time before launch

NLU: Pros & Cons

Pros

- High control over UX writing, tone, and response length

- Predictable, rule-based responses reduce risk

- Easier to align content with institutional policies and accuracy requirements

Cons

- Limited to predefined intents and topics

- Struggles with unexpected or highly complex prompts

- Requires careful intent separation to avoid misclassification

Why We Chose NLU for Launch

- ServiceNow only supports one approach at a time (LLM or NLU)

- The upcoming July 2025 platform launch required stability and predictability

- NLU allowed us to ship research-backed, tested conversations with lower risk to student trust

- LLM improvements would require additional time beyond the project scope

Testing

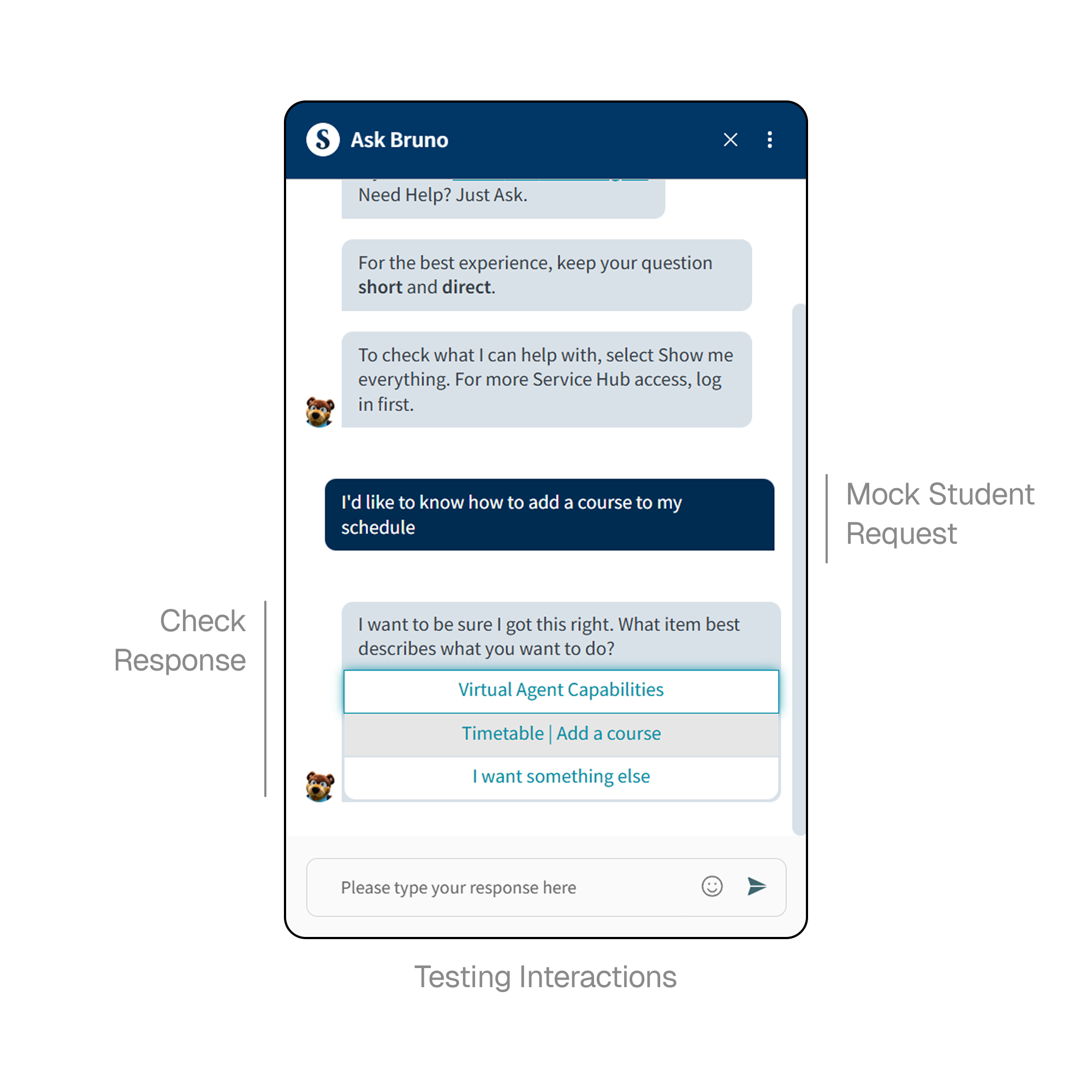

Seeing Firsthand How The Chatbot Responds To 2,000+ Prompt Tests

Chatbot Testing & Iteration Using Real Student Language

We continuously tested chatbot responses using real, anonymized student prompts to evaluate intent matching and response accuracy. Insights from each test informed iterative refinements to utterances, content, and topic structure, reinforcing a cycle of evidence-based improvement rather than one-time design decisions.
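The evaluation loop described above can be summarized with a small Python sketch that scores a batch of test results. It assumes each test is logged as the prompt, the intent it should have matched, and the intent the chatbot actually returned. The `TestResult` record, the intent labels, and the `fallback` label are hypothetical and are not ServiceNow fields.

```python
from collections import Counter
from dataclasses import dataclass

FALLBACK = "fallback"  # hypothetical label for "no intent matched"


@dataclass(frozen=True)
class TestResult:
    prompt: str
    expected_intent: str  # intent a reviewer says the prompt should reach
    matched_intent: str   # intent the chatbot actually routed to


@dataclass
class Report:
    total: int
    accuracy: float        # share of prompts routed to the expected intent
    fallback_rate: float   # share of prompts that hit the fallback
    confusions: list[tuple[tuple[str, str], int]]  # most common misroutes


def summarize(results: list[TestResult], top_n: int = 3) -> Report:
    total = len(results)
    if total == 0:
        return Report(0, 0.0, 0.0, [])
    correct = sum(r.matched_intent == r.expected_intent for r in results)
    fallbacks = sum(r.matched_intent == FALLBACK for r in results)
    # Count (expected, matched) pairs where the bot chose a different real topic.
    misroutes = Counter(
        (r.expected_intent, r.matched_intent)
        for r in results
        if r.matched_intent not in (r.expected_intent, FALLBACK)
    )
    return Report(total, correct / total, fallbacks / total, misroutes.most_common(top_n))


# Hypothetical sample results, for illustration only.
SAMPLE_RESULTS = [
    TestResult("when is tuition due", "tuition_payment", "tuition_payment"),
    TestResult("i can't log in", "reset_password", "reset_password"),
    TestResult("fees for my program", "tuition_payment", "financial_aid"),
    TestResult("hi how are you", "small_talk", FALLBACK),
]
```

Tracking misroutes separately from fallbacks helps distinguish overlapping topics (the wrong intent was matched) from coverage gaps (no intent was matched), two of the usability findings identified earlier.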

Screenshot of an example student prompt testing.

Reflection

Designing For Trust And Support

Reflection

This project reinforced that good UX, especially in AI-driven systems, is less about novelty and more about trust. Students often came to the chatbot during stressful or time-sensitive moments, which made clarity, empathy, and predictability just as important as correct answers. Working within real system constraints taught me how to make thoughtful tradeoffs that protect users while still improving the experience. It strengthened my belief that research-led design is essential when technology directly impacts people’s well-being.